Canonical Correlation Inference for Mapping Abstract Scenes to Text

Description

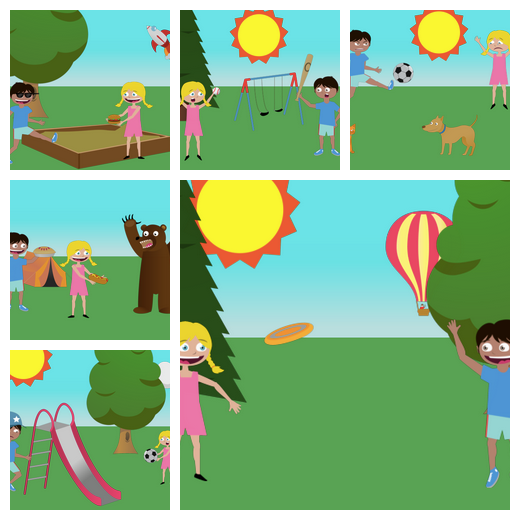

This project uses canonical correlation analysis (CCA) to map images and text to a shared space, and then uses this shared space to map unseen images to corresponding captions. The dataset we use is the abstract scene dataset, developed at Microsoft, from which we show a couple of pictures on this page.
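To make the idea of a shared space concrete, here is a minimal sketch of plain linear CCA in NumPy. It is not the inference procedure from the paper: it only learns the two projections and then retrieves the closest training caption for a new image, which is a simplification chosen for illustration. The function names, the regularization constant `reg`, and the assumption that images and captions are already encoded as fixed-length feature vectors are all hypothetical.

```python
import numpy as np


def fit_cca(X, Y, k, reg=1e-3):
    """Fit linear CCA on paired rows of X (image features) and Y (text features)."""
    mx, my = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - mx, Y - my
    n = X.shape[0]
    # Regularized covariance and cross-covariance estimates.
    Cxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Cyy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Cxy = Xc.T @ Yc / (n - 1)

    def inv_sqrt(C):
        # Inverse square root of a symmetric positive-definite matrix.
        w, V = np.linalg.eigh(C)
        return V @ np.diag(w ** -0.5) @ V.T

    Wx, Wy = inv_sqrt(Cxx), inv_sqrt(Cyy)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    U, s, Vt = np.linalg.svd(Wx @ Cxy @ Wy)
    A = Wx @ U[:, :k]      # projection for the image view
    B = Wy @ Vt.T[:, :k]   # projection for the text view
    return mx, my, A, B, s[:k]


def project(Z, mean, W):
    """Map feature vectors (rows of Z) into the shared k-dimensional space."""
    return (Z - mean) @ W


def nearest_caption(x_new, mx, A, Y, my, B):
    """Index of the training caption closest to a new image in the shared space."""
    u = project(x_new[None, :], mx, A)
    V = project(Y, my, B)
    return int(np.argmin(np.linalg.norm(V - u, axis=1)))
```

Retrieval by Euclidean distance is used here only because it is the simplest way to show both views landing in the same space; any decoding step that produces new captions would replace `nearest_caption`.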

Paper

Click here for the following paper.

@inproceedings{papasarantopoulos-18,

title={Canonical Correlation Inference for Mapping Abstract Scenes to Text},

author={Nikos Papasarantopoulos and Helen Jiang and Shay B. Cohen},

booktitle={Proceedings of {AAAI}},

year={2018}

}

Some notes

Click here to download the ranked captions.

The file is formatted as follows. It has 300 lines, one per ranked image. Each line consists of fields separated by ^, in the following order:

- The image name in the [abstract scene dataset](https://vision.ece.vt.edu/clipart/) (in the RenderedScenes/ directory)

- Gold-standard caption 1

- Gold-standard caption 2

- Gold-standard caption 3

- Gold-standard caption 4

- Gold-standard caption 5

- Gold-standard caption 6

- Gold-standard caption 7

- Gold-standard caption 8

- Gold-standard caption that was rated

- The caption from Ortiz et al. (statistical machine translation system) that was rated

- The CCA caption that was rated

- Average rating (by 2-3 subjects) for the gold caption, a number between 1 (least relevant) and 5 (most relevant)

- Average rating for the SMT caption

- Average rating for the CCA caption

Not all images have 8 gold-standard captions, so some of the gold-standard caption fields can be empty.
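The field layout above can be read with a few lines of Python. This is a minimal sketch under some assumptions that are not stated on this page: that captions never contain the ^ character, that there is no trailing separator, that the ratings parse as decimal numbers, and that the file is UTF-8 text. The names `RankedEntry`, `parse_line`, and `read_ranked_captions` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterator, List

NUM_GOLD = 8                        # gold-standard caption slots per line
NUM_FIELDS = 1 + NUM_GOLD + 3 + 3   # name, gold captions, rated captions, ratings


@dataclass
class RankedEntry:
    image_name: str
    gold_captions: List[str]   # only the non-empty gold-standard captions
    rated_gold: str
    rated_smt: str
    rated_cca: str
    gold_rating: float
    smt_rating: float
    cca_rating: float


def parse_line(line: str) -> RankedEntry:
    """Split one ^-separated line into its 15 fields (assumed layout)."""
    fields = [f.strip() for f in line.rstrip("\n").split("^")]
    if len(fields) != NUM_FIELDS:
        raise ValueError(f"expected {NUM_FIELDS} fields, got {len(fields)}")
    # Drop the empty slots of images with fewer than 8 gold captions.
    gold = [c for c in fields[1:1 + NUM_GOLD] if c]
    rated_gold, rated_smt, rated_cca = fields[1 + NUM_GOLD:4 + NUM_GOLD]
    gold_r, smt_r, cca_r = (float(x) for x in fields[4 + NUM_GOLD:])
    return RankedEntry(fields[0], gold, rated_gold, rated_smt, rated_cca,
                       gold_r, smt_r, cca_r)


def read_ranked_captions(path: str) -> Iterator[RankedEntry]:
    """Yield one entry per non-blank line of the ranked-captions file."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            if line.strip():
                yield parse_line(line)
```

With the entries loaded, comparing the systems is a matter of averaging `gold_rating`, `smt_rating`, and `cca_rating` over the lines. The strict field-count check is deliberate, so that a line that does not match the assumed layout fails loudly instead of shifting fields silently.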

Click here to download the splits that we used for the training/development/tuning/test sets. These are the same splits used by Ortiz et al. Each file in this gzipped tarball contains a list of pointers to the scenes that were used for the relevant set. The human-ranked images are the first 300 images of the test set.